Custom models for automating image and document processing

Levity enables companies to automate workflows specific to their business using models trained on-demand with custom data sets. Levity decided to adopt Valohai to manage their machine learning infrastructure & model serving through Kubernetes instead of hiring an MLOps engineer.

TL;DR

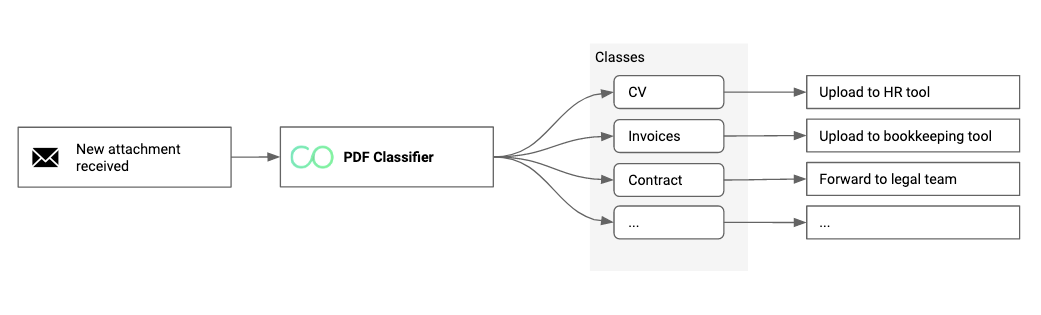

- Levity enables companies to automate workflows specific to their business, from recognizing objects in microscopic images to automatically categorizing incoming documents for different internal workflows.

- They needed a solution that automatically manages their machine learning infrastructure and model serving through their Kubernetes cluster on Google Cloud Platform.

- Levity started by building their own MLOps solution with Kubeflow but quickly realized that the cost of building and maintaining it, and of hiring the right talent to do so, was not financially sustainable.

- Today Levity can focus on building their platform and its integrations, leaving the hassle of maintaining the machine learning infrastructure and Kubernetes to Valohai.

Even with all the ready-made pieces we could use to build our solution, it just becomes an unreasonable budget and resourcing request to build and maintain our own custom MLOps solution.

Thilo Huellmann – CTO & Co-Founder at Levity

Levity helps businesses build custom models for automating image and document processing

Every business has workflows that can be automated, and every business is different. Out-of-the-box solutions, such as APIs that classify dogs and hot dogs, will take you only so far.

Our friends at Levity enable businesses to leverage their existing data and workflows to build custom classifiers. Their customers are using a series of pre-built integrations to connect their labeled data, train a new classifier and automate their manual PDF, image, or text classification workflows.

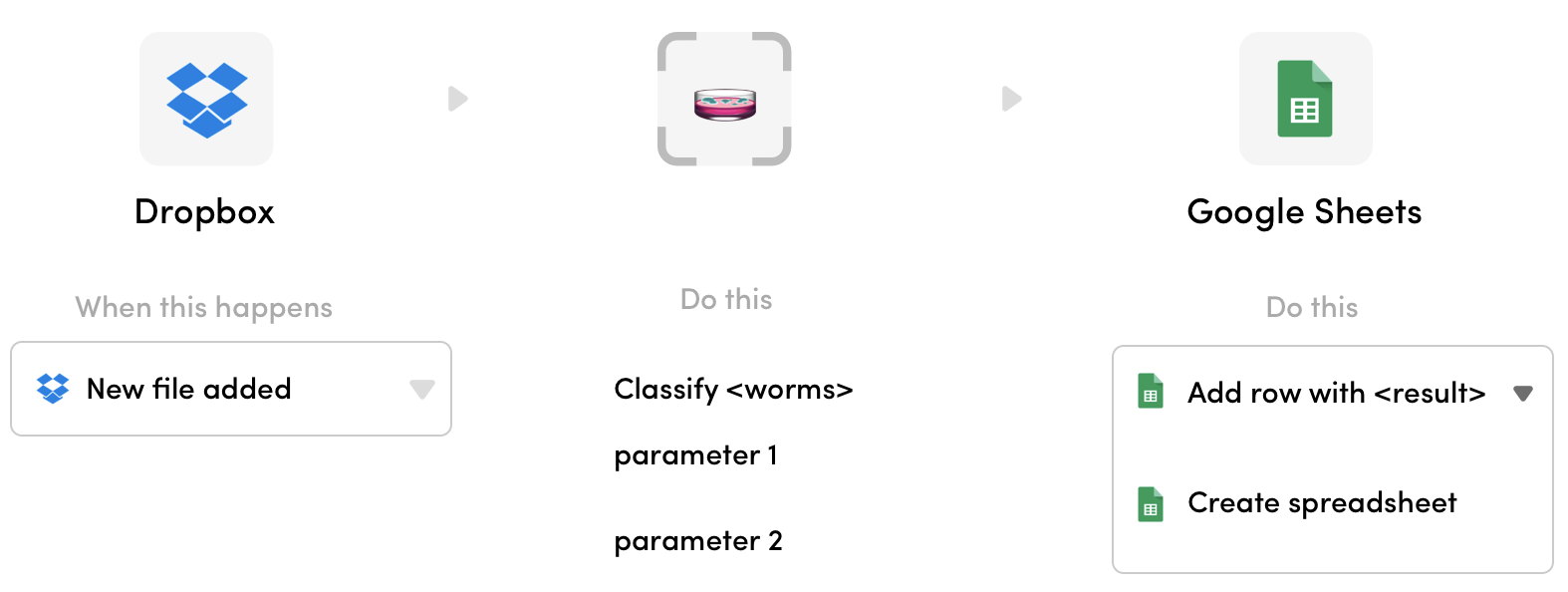

Levity's power lies in its connectors: customers can link a wide variety of data sources (e.g. Dropbox, Google Drive, Slack, Airtable), build a classifier, and output the results in the format they prefer (e.g. Google Sheets). For example, Vetevo uses Levity to automatically detect worm eggs in microscopic images as part of their veterinary services.

"We spent months building our MLOps solution - and didn’t end up anywhere"

Early on, Levity knew they needed a way to manage their machine learning pipeline: a solution that would manage the Google Cloud Platform infrastructure used for model training, version executions, and deploy ready models for online prediction on their Kubernetes cluster.

They started building their own MLOps solution using Kubeflow and Google Kubernetes Engine (GKE), but it quickly became apparent that the financials didn’t make sense. Building and maintaining a full MLOps solution while also building their product demanded more effort than they could justify.

Thilo Huellmann, CTO and Co-Founder at Levity, remembers the effort needed just to get models served through Kubernetes. They needed to decide on an API gateway to serve their models, primarily looking into Ambassador and Kong. Then they had to figure out how to create new types of resources with CRDs (Custom Resource Definitions) on Kubernetes and, on top of that, how to interact with Kubernetes itself: sending JSON, creating manifests, and defining pods. It added up to a lot of work spent reinventing the wheel.
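
To give a sense of what "creating manifests and defining pods" involves, here is a minimal sketch of a Pod manifest for a single model-serving container, built as a Python dictionary and serialized to the JSON that Kubernetes accepts. The pod name, image, and port are hypothetical placeholders, not Levity's actual configuration, and a real setup would also need Deployments, Services, and gateway routing on top of this.

```python
import json


def build_pod_manifest(name: str, image: str, port: int) -> dict:
    """Return a minimal Kubernetes Pod manifest for one model-serving container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Port the model server listens on inside the container.
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


# Hypothetical values, for illustration only.
manifest = build_pod_manifest(
    name="classifier-v1",
    image="registry.example.com/classifier:1.0",
    port=8000,
)

# Kubernetes accepts manifests as JSON as well as YAML.
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Even this small example covers only one pod for one model version; every new model version, gateway route, and custom resource adds more of the same to write and maintain.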

It’s hard to find candidates with experience in Kubernetes, Kubeflow, and Deep Learning - and even if we found that unicorn it wouldn’t make any sense to have them spend 90% of their time reinventing the wheel.

Thilo Huellmann – CTO & Co-Founder at Levity

Comparing MLOps solutions on the market

Levity decided to look for an external solution built for MLOps, allowing them to focus on developing their platform. Their criteria for the solution were:

- Get up and running fast - Not having to jump through hoops to get their code to run on the service.

- No need to configure and manage the underlying infrastructure - They wanted to be able to use their GPU and CPU machines from their GCP subscription and easily manage deployment versions on a Kubernetes cluster, while keeping the option to switch to another environment (e.g. AWS, Azure) if the need arises.

- Support for a variety of frameworks - Having the freedom to choose, and change over time, the frameworks they use for training (e.g. TensorFlow, PyTorch) and model serving (e.g. FastAPI) without restrictions from the MLOps provider.

Automating machine learning pipelines with Valohai

Coming into Valohai, Levity wanted to quickly get their existing projects running on the Valohai platform using CPU and GPU machines from their GCP subscription.

Each Valohai customer receives a customized onboarding session containing:

- Support for connecting existing compute and storage resources to Valohai.

- A hands-on technical onboarding session to get up to speed with key platform features and get your project up and running on Valohai (with data inputs/outputs, versioning, parameter tuning, collecting & visualizing metrics).

- A dedicated channel (e.g. Slack, Teams) and regular catch-up calls for technical support and sharing best practices.

Today, Levity has automated their pipeline using Valohai APIs to trigger actions on Valohai, such as training a new model, evaluating results, and managing endpoint deployments, without having to worry about the infrastructure, Kubernetes configurations, or even opening the Valohai app.
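
As a rough illustration of what triggering a training run over an API can look like, the sketch below builds (but does not send) an HTTP request that would start an execution. The endpoint path, the `Token` authorization scheme, and the payload field names reflect our reading of Valohai's public REST API and should be checked against the current API documentation. The token, project ID, commit, step name, and parameters are placeholders, and this is not Levity's actual integration code.

```python
import json
import urllib.request

# Assumption: base URL of the Valohai REST API; verify against the current docs.
API_BASE = "https://app.valohai.com/api/v0"


def build_execution_request(token, project_id, commit, step, parameters=None):
    """Build a POST request that would start a new execution (for example, a training run)."""
    payload = {
        "project": project_id,
        "commit": commit,
        "step": step,
        "parameters": parameters or {},
    }
    return urllib.request.Request(
        url=f"{API_BASE}/executions/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values, for illustration only.
request = build_execution_request(
    token="PLACEHOLDER_TOKEN",
    project_id="PLACEHOLDER_PROJECT_ID",
    commit="PLACEHOLDER_COMMIT",
    step="train-model",
    parameters={"epochs": 10},
)

# Sending is left out on purpose; urllib.request.urlopen(request) would submit it.
print(request.get_method(), request.full_url)
```

A backend service can issue a request like this whenever a customer uploads newly labeled data, so training starts without anyone opening a web UI or touching the cluster.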

By hiring Valohai to take care of the MLOps side of the development, Levity was able to use their budget to focus on hiring engineers to work directly on the projects, instead of spending time finding multiple unicorn-hires to re-invent the MLOps wheel.

To hear more about how Levity utilizes Valohai, reach out to us.

To read more about Levity, visit www.levity.ai

